SageMaker TensorBoard

Author: Alicia Bosch, 21.12.2018

Amazon SageMaker Pricing


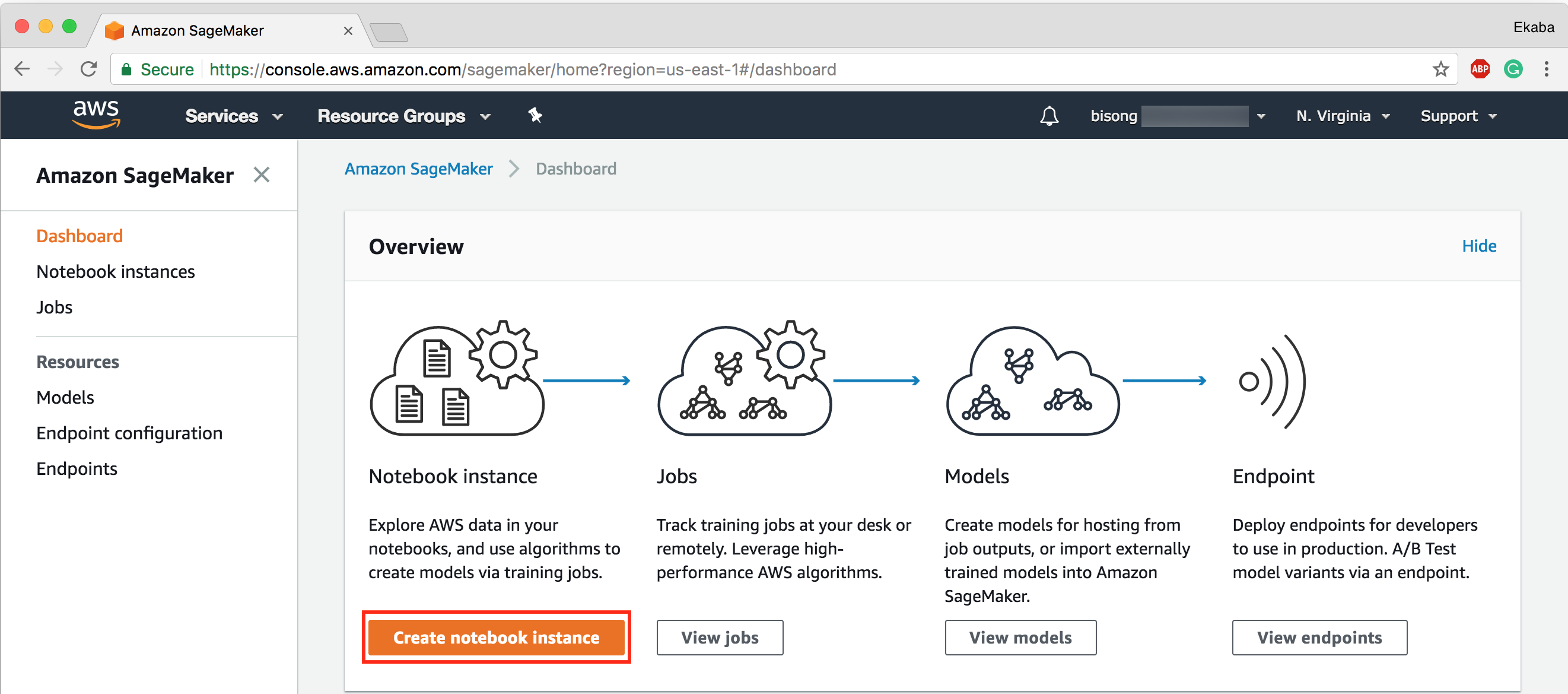

Deploy: Once your model is trained and tuned, Amazon SageMaker makes it easy to deploy it in production so you can start generating predictions (a process called inference) for real-time or batch data. TensorBoard can also plot the progression of metrics on a nice graph, and we can expand each of these blocks by clicking the plus sign to see more detail.
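For real-time inference, a client sends requests to the deployed endpoint. The sketch below is a minimal example using the boto3 `sagemaker-runtime` client's `invoke_endpoint` call. It assumes the endpoint accepts and returns JSON in a TF Serving-style `{"instances": [...]}` shape; the endpoint name and payload are hypothetical. The client is passed in as a parameter so the function can be exercised without AWS credentials.

```python
import json


def predict(endpoint_name, instances, runtime_client=None):
    """Send a JSON request to a deployed SageMaker endpoint and decode the JSON reply."""
    if runtime_client is None:
        import boto3  # imported lazily so the function can be tested with a fake client
        runtime_client = boto3.client("sagemaker-runtime")
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,           # hypothetical endpoint name supplied by the caller
        ContentType="application/json",
        Body=json.dumps({"instances": instances}),  # assumed TF Serving-style payload
    )
    # The response body is a streaming object; read it fully and parse the JSON.
    return json.loads(response["Body"].read())
```

Remember that the endpoint bills for as long as it is running, whether or not `predict` is ever called.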

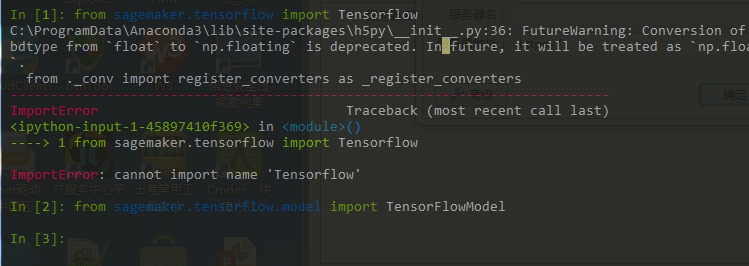

hyperparameters(): returns the hyperparameters used by your custom TensorFlow code during model training. If you want to see the result, you have to run this code in an interactive session.
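Your training code still has to receive those hyperparameters. The sketch below is minimal and assumes the script-mode convention, in which each hyperparameter reaches the entry-point script as a `--name value` command-line argument. The three hyperparameter names are hypothetical.

```python
import argparse


def parse_hyperparameters(argv=None):
    """Parse hyperparameters passed as command-line arguments (script-mode convention assumed)."""
    parser = argparse.ArgumentParser()
    # Values arrive as strings; `type=` converts them to what the training code expects.
    parser.add_argument("--epochs", type=int, default=10)            # hypothetical name
    parser.add_argument("--learning-rate", type=float, default=0.001)  # hypothetical name
    parser.add_argument("--batch-size", type=int, default=32)        # hypothetical name
    # Ignore extra arguments the platform may add (for example, a model directory).
    args, _unknown = parser.parse_known_args(argv)
    return vars(args)
```

Using `parse_known_args` rather than `parse_args` keeps the script from failing on arguments it does not define.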

Rather than reusing a single log directory, you can have each new training job write to a new subdirectory of your top-level log directory, so TensorBoard shows every run separately. SageMaker provides out-of-the-box tools or lets you bring your own.
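Here is a minimal standard-library sketch of the one-subdirectory-per-run layout. The `run-<timestamp>` naming scheme is an assumption. On SageMaker, the root would typically be an S3 prefix rather than a local path.

```python
import os
import time


def new_run_logdir(root_logdir, prefix="run"):
    """Create and return a fresh subdirectory of root_logdir for a single training run."""
    base = os.path.join(root_logdir, f"{prefix}-{time.strftime('%Y%m%d-%H%M%S')}")
    suffix = 0
    while True:
        # Add a numeric suffix if another run already claimed this timestamp.
        candidate = base if suffix == 0 else f"{base}-{suffix}"
        try:
            os.makedirs(candidate)
            return candidate
        except FileExistsError:
            suffix += 1
```

If you point `tensorboard --logdir` at the root directory, each subdirectory appears as its own run. Older runs are never overwritten.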

I have been evaluating AWS SageMaker with TensorFlow and integrating it into our Python-heavy ML stack to provide the model training step. The idea is to avoid buying GPU-heavy servers to train and deploy models, and to take advantage of co-located S3 buckets for training and data storage. What follows is a review of our experiences over the past quarter.

I want to preface it by saying that SageMaker was released 5 months ago. It is early in its lifecycle, and we expect it to keep improving quickly. We are still planning on using SageMaker and building our ML infrastructure around it despite everything below. A lot of the issues are minor or easily worked around. Some of the following are pitfalls that might not be obvious from the documentation, and some are legitimate bugs, many of which AWS is considering. Still, anyone considering SageMaker should take note of what we've run into.

Performance

It takes ~5-6 minutes to start a training job or an endpoint. The only ways to get predictions out of a model trained on SageMaker are either to download it and recreate it locally or to start up the endpoint, which is a TF Serving instance afaict. I try not to keep endpoints running, since they cost money even if you're not making predictions against them. Even if they're cheap, managing them is more overhead than I'd like.

They're not the best about keeping TensorFlow up to date -- currently you can use 1. You can always specify newer versions in your requirements.

Inconveniences

When you create a TensorFlow training job, the idea is that you give SageMaker the code that you need to define a tf. If you have dependencies, you can either specify them in requirements. Estimator constructor just wants the signature of something like. This is a little annoying if you want to have a way to test these functions without hitting SageMaker. Their TensorBoard wrapper i.
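One way to keep these functions testable is to make the SageMaker-facing function a thin wrapper around plain Python. You can then unit-test the plain Python without launching a job. The sketch below assumes the legacy entry-point convention, in which SageMaker calls `train_input_fn(training_dir, hyperparameters)`. The `train.csv` file name, the CSV layout, and the `parse_rows` helper are all hypothetical. A real input function would return TensorFlow tensors or a `tf.data` pipeline rather than Python lists.

```python
import csv
import os


def parse_rows(lines, label_column="label"):
    """Pure helper (hypothetical): turn CSV lines into (features, labels). Testable locally."""
    features, labels = [], []
    for row in csv.DictReader(lines):
        labels.append(int(row.pop(label_column)))
        features.append([float(value) for value in row.values()])
    return features, labels


def train_input_fn(training_dir, hyperparameters):
    """Thin SageMaker-facing wrapper (legacy signature assumed); all logic lives in parse_rows."""
    label_column = hyperparameters.get("label_column", "label")
    with open(os.path.join(training_dir, "train.csv"), newline="") as f:  # hypothetical file name
        return parse_rows(f, label_column)
```

Tests then target `parse_rows` directly with in-memory lines. That leaves only a few lines that need SageMaker to exercise.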
It does, however, leave uncleanable root-owned files around after training, which is less than ideal.

One of my favorite things about AWS is its detailed permissions system. It is also one of the most annoying things, and it's no easier in SageMaker.

Their Python SDK is weirdly incomplete in places. For example, you can't delete a model through it; you have to grab your boto3 session and call the API directly. You know that thing about requirements.

Limitations

Ultimately, afaict there's no way to 'extend' the TensorFlow container and still use it with the TensorFlow SDK they provide, since the SDK will call upon the default TensorFlow image. You can of course write your own SageMaker containers, but that's a lot more work...

Seems a little insane to me, but here we are. Working with AWS on getting this fixed. Instead, I currently have a job that pulls and processes the data elsewhere and then uploads it to the appropriate S3 bucket. This is awkward but works.

What does work

It's really easy to stand up an endpoint from the SDK given just the information needed to create the model. GPU training is easy-peasy: just tell it to use a GPU instance. You do have to get a service limit increase to use more than one, however. Distributed training is also easy: just tell it to use more instances!

Other notes

It seems to store the tar'd source dirs on a per-run basis in an S3 bucket I never told it to use, and it keeps telling me it's creating that bucket when it runs. Looking at the code, this is actually a pretty minor bug: in some cases it just asks for the default bucket rather than using the provided one. I'd go to that first. Don't even bother with this one. Hope this helps anyone out there looking to start doing their ML training in!
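The review notes that the Python SDK cannot delete a model, so cleanup has to go through the low-level API. The sketch below is minimal and uses the boto3 `sagemaker` client's `delete_endpoint`, `delete_endpoint_config`, and `delete_model` calls. The resource names are placeholders. The `client` parameter exists only so the helper can be tested offline.

```python
def delete_endpoint_and_model(endpoint_name, endpoint_config_name, model_name, client=None):
    """Tear down an endpoint, its endpoint config, and its model via the low-level API."""
    if client is None:
        import boto3  # imported lazily so the helper can be tested with a fake client
        client = boto3.client("sagemaker")
    # Delete the endpoint first: it is the resource that costs money while it is running.
    client.delete_endpoint(EndpointName=endpoint_name)
    client.delete_endpoint_config(EndpointConfigName=endpoint_config_name)
    client.delete_model(ModelName=model_name)
```

Calling a helper like this right after a batch of predictions is one way to avoid leaving endpoints running between experiments.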

Scheduling the training of a SageMaker model with a Lambda function
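The sketch below shows a minimal Lambda handler that starts a SageMaker training job through the boto3 `create_training_job` call. It assumes the function is invoked on a schedule, for example by a CloudWatch Events rule. Everything in `TRAINING_JOB_TEMPLATE` and `BASE_JOB_NAME` is a hypothetical placeholder to replace with your own image, role, S3 paths, and instance type. Lambda only passes `event` and `context`, so the extra `client` parameter keeps its default in production and is used only for testing.

```python
import time

# Hypothetical placeholders: fill in your own image URI, IAM role, S3 paths, and instance type.
TRAINING_JOB_TEMPLATE = {
    "AlgorithmSpecification": {
        "TrainingImage": "<training-image-uri>",
        "TrainingInputMode": "File",
    },
    "RoleArn": "<sagemaker-execution-role-arn>",
    "OutputDataConfig": {"S3OutputPath": "s3://<bucket>/<output-prefix>"},
    "ResourceConfig": {"InstanceType": "ml.m5.xlarge", "InstanceCount": 1, "VolumeSizeInGB": 10},
    "StoppingCondition": {"MaxRuntimeInSeconds": 3600},
}
BASE_JOB_NAME = "scheduled-training"  # hypothetical


def lambda_handler(event, context, client=None):
    """Scheduled Lambda entry point: start one SageMaker training job per invocation."""
    if client is None:
        import boto3  # imported lazily so the handler can be tested with a fake client
        client = boto3.client("sagemaker")
    # Training job names must be unique, so append a UTC timestamp.
    job_name = f"{BASE_JOB_NAME}-{time.strftime('%Y-%m-%d-%H-%M-%S', time.gmtime())}"
    params = dict(TRAINING_JOB_TEMPLATE, TrainingJobName=job_name)  # template left unmodified
    client.create_training_job(**params)
    return {"TrainingJobName": job_name}
```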

Once built, models can be hosted easily in low-latency, auto-scaling endpoints, or passed to other real-time bidding systems. Session as sess: to start your TensorFlow session. Make sure to check out the book, written by Nishant Shukla.

Custom Estimators

The heart of every Estimator, whether pre-made or custom, is its model function, which is a method that builds graphs for training, evaluation, and prediction. You can run this first step on standard instances or select GPUs for more compute-intensive requirements. All Estimators, whether pre-made or custom, are classes based on the class. This has been done for you here.

Unit vectors are vectors with a magnitude of one. You usually assume that the direction is positive and measured counterclockwise from the reference direction.
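The unit-vector definition can be made concrete in a few lines of plain Python. Dividing a vector by its magnitude gives a vector of magnitude one. `math.atan2` gives the direction as a counterclockwise angle, and this sketch assumes the positive x-axis as the reference direction.

```python
import math


def unit_vector(v):
    """Return v scaled to magnitude one; raises ValueError for the zero vector."""
    magnitude = math.sqrt(sum(component * component for component in v))
    if magnitude == 0:
        raise ValueError("the zero vector has no direction")
    return [component / magnitude for component in v]


def direction_degrees(x, y):
    """Counterclockwise angle of (x, y) from the positive x-axis, in the range [0, 360)."""
    return math.degrees(math.atan2(y, x)) % 360
```

For example, `unit_vector([3, 4])` is `[0.6, 0.8]`, and `direction_degrees(0, -1)` is 270 rather than -90. This is because the `% 360` keeps angles positive.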