Hive programming

Author: Karrie Hodges 17.12.2018


Column families are physically stored together in a distributed cluster, which makes reads and writes faster when typical queries touch only a small subset of the columns. The Google File System was described in its own paper a year before the MapReduce paper.
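A toy Python sketch of why grouping columns into families helps: rows are regrouped so that each family is stored together, and a query on one family reads only that family's store. The row keys, family names and helper functions are hypothetical, and real column-family stores such as HBase keep each family in separate files on disk rather than in Python dicts.

```python
# Hypothetical row-oriented data: each row's columns are grouped into two families.
rows = {
    "user1": {"profile": {"name": "Ann", "city": "Oslo"}, "stats": {"visits": 3}},
    "user2": {"profile": {"name": "Bo", "city": "Rome"}, "stats": {"visits": 7}},
}

def to_family_stores(rows):
    """Regroup row-oriented data so that each column family is stored together."""
    stores = {}
    for row_key, families in rows.items():
        for family, columns in families.items():
            stores.setdefault(family, {})[row_key] = columns
    return stores

def scan_family(stores, family):
    """A query on one family reads only that family's store, never the others."""
    return stores[family]

stores = to_family_stores(rows)
visits = {key: cols["visits"] for key, cols in scan_family(stores, "stats").items()}
print(visits)  # → {'user1': 3, 'user2': 7}
```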

In MapReduce, all the key-value pairs for a given key are sent to the same reduce operation. The MapReduce programming model was developed at Google and described in an influential paper called "MapReduce: Simplified Data Processing on Large Clusters".
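The key-routing rule can be illustrated with a minimal, single-process Python sketch of word count. It is a toy, not Hadoop's API: the names map_phase, shuffle and reduce_phase are hypothetical, and hashing keys with zlib.crc32 is an assumed stand-in for Hadoop's partitioner. What matters is that every pair with the same key lands in the same reducer's input.

```python
import zlib
from collections import defaultdict

def map_phase(lines):
    """Emit a (word, 1) key-value pair for every word in every line."""
    for line in lines:
        for word in line.split():
            yield word, 1

def shuffle(pairs, num_reducers):
    """Route each pair to a reducer chosen by hashing its key, so all pairs
    sharing a key end up in the same reducer's input."""
    partitions = [defaultdict(list) for _ in range(num_reducers)]
    for key, value in pairs:
        r = zlib.crc32(key.encode("utf-8")) % num_reducers
        partitions[r][key].append(value)
    return partitions

def reduce_phase(partition):
    """Each reducer sums the values it received for each of its keys."""
    return {key: sum(values) for key, values in partition.items()}

docs = ["the quick fox", "the lazy dog", "the fox"]
counts = {}
for partition in shuffle(map_phase(docs), num_reducers=2):
    counts.update(reduce_phase(partition))  # no key appears in two reducers
print(sorted(counts.items()))
# → [('dog', 1), ('fox', 2), ('lazy', 1), ('quick', 1), ('the', 3)]
```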

- In fact, in real-world implementations you will be dealing with hundreds of terabytes of data. Pig's language, Pig Latin, is a procedural data-flow language rather than a declarative one. This is appropriate, since it is not designed as a query language, but it also means that Pig is less suitable for porting SQL applications, and experienced SQL users will face a steeper learning curve with Pig.

Apache Hive is a data warehouse software project built on top of Apache Hadoop for providing data query and analysis. Hive gives an SQL-like interface to query data stored in various databases and file systems that integrate with Hadoop. Without it, traditional SQL queries would have to be implemented in the low-level MapReduce Java API to run over distributed data. Hive provides the necessary SQL abstraction so that SQL-like queries can be run on the underlying Java framework without implementing them in that low-level API. Since most data warehousing applications work with SQL-based query languages, Hive aids the portability of SQL-based applications to Hadoop. While initially developed by Facebook, Apache Hive is also used and developed by other organizations such as the Financial Industry Regulatory Authority (FINRA). Amazon maintains a software fork of Apache Hive that is included in Amazon Elastic MapReduce (EMR) on Amazon Web Services.

Apache Hive supports analysis of large datasets stored in Hadoop's HDFS and compatible file systems such as Amazon S3. It provides an SQL-like query language called HiveQL with schema on read, and transparently converts queries to MapReduce, Apache Tez and Apache Spark jobs. All three execution engines can run on Hadoop YARN. Earlier versions also provided indexes, including bitmap indexes, to accelerate queries; indexing was removed in Hive 3.0. Hive supports extending the set of user-defined functions (UDFs) to handle use cases not supported by built-in functions. The first four file formats supported in Hive were plain text, SequenceFile, Optimized Row Columnar (ORC) and RCFile. Additional Hive plugins support querying of the Bitcoin blockchain.

The metastore stores metadata for each table, such as its schema and location. It also includes the partition metadata, which helps the driver track the progress of the various data sets distributed over the cluster. This metadata is stored in a traditional RDBMS format. Because the driver relies on it to keep track of the data, the metadata is crucial; hence, a backup server regularly replicates it so that it can be retrieved in case of data loss.
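To make the metastore's role concrete, here is a minimal in-memory Python sketch of the kind of record it keeps per table: schema, location and partition metadata. Everything in it (the TableMeta and Metastore classes, the /warehouse/logs path, the dt=2018-12-17 partition) is hypothetical; the real Hive metastore persists this information in a relational database and is accessed through its own service, not through classes like these.

```python
from dataclasses import dataclass, field

@dataclass
class TableMeta:
    """Hypothetical metastore entry: schema, storage location, partitions."""
    name: str
    columns: dict[str, str]   # column name -> Hive type name
    location: str             # directory holding the table's data files
    partitions: dict[str, str] = field(default_factory=dict)  # spec -> directory

class Metastore:
    """Toy catalog mapping table names to their metadata."""

    def __init__(self):
        self._tables: dict[str, TableMeta] = {}

    def register(self, meta: TableMeta) -> None:
        self._tables[meta.name] = meta

    def add_partition(self, table: str, spec: str) -> None:
        # Each partition lives in its own subdirectory of the table location.
        meta = self._tables[table]
        meta.partitions[spec] = f"{meta.location}/{spec}"

    def lookup(self, table: str) -> TableMeta:
        """What a driver would consult to find a table's schema and data."""
        return self._tables[table]

ms = Metastore()
ms.register(TableMeta("logs", {"ts": "bigint", "msg": "string"}, "/warehouse/logs"))
ms.add_partition("logs", "dt=2018-12-17")
print(ms.lookup("logs").partitions)
# → {'dt=2018-12-17': '/warehouse/logs/dt=2018-12-17'}
```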
The driver acts as a controller that receives HiveQL statements. It starts the execution of a statement by creating sessions, and monitors the life cycle and progress of the execution. It stores the necessary metadata generated during the execution of a HiveQL statement. The driver also acts as a collection point for data or query results obtained after the reduce operation.

The compiler compiles the HiveQL query into an execution plan. This plan contains the tasks and steps that Hadoop MapReduce must perform to produce the output the query describes. The compiler first converts the query to an abstract syntax tree (AST). After checking for compatibility and compile-time errors, it converts the AST to a directed acyclic graph (DAG). The DAG divides operators into MapReduce stages and tasks based on the input query and data.

The optimizer performs various transformations on the execution plan to produce an optimized DAG. Transformations can be aggregated together, such as converting a pipeline of joins into a single join, for better performance. The optimizer can also split tasks, such as applying a transformation on data before a reduce operation, to provide better performance and scalability. The transformation logic used for optimization can also be modified or pipelined using another optimizer.

After compilation and optimization, the executor runs the tasks. It interacts with Hadoop's job tracker to schedule the tasks to be run, and it takes care of pipelining them by making sure that a task with dependencies is executed only after all of its prerequisites have run.

A command-line interface (CLI) and a user interface (UI) let users interact with Hive by submitting queries and monitoring their status, while the Thrift server allows external clients to interact with Hive over a network, similar to the JDBC or ODBC protocols.

While based on SQL, HiveQL does not strictly follow the full SQL-92 standard. HiveQL offers extensions not in SQL, including multitable inserts and CREATE TABLE AS SELECT, but originally offered only basic support for indexes. HiveQL lacked support for transactions and materialized views, and had only limited subquery support. Support for INSERT, UPDATE, and DELETE with full functionality was made available in a later Hive 0.x release. Internally, a compiler translates HiveQL statements into a directed acyclic graph of MapReduce, Tez, or Spark jobs, which are submitted to Hadoop for execution.

In a typical word-count query, an inner query splits the input lines into words, placing each word in its own row of a temporary table aliased as temp. GROUP BY word then groups the results by their keys.
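The executor's rule that a task runs only after all of its prerequisites have run can be sketched as a topological traversal of the stage DAG. This minimal Python sketch uses the standard library's graphlib.TopologicalSorter (Python 3.9+); the stage names are hypothetical, and "running" a stage is simulated by recording it rather than submitting a real job.

```python
from graphlib import TopologicalSorter

# Hypothetical stage DAG: each stage maps to the set of stages it depends on.
stages = {
    "scan_docs": set(),
    "scan_stopwords": set(),
    "join": {"scan_docs", "scan_stopwords"},
    "group_by_word": {"join"},
}

def run_stages(graph):
    """Run each stage only after every one of its prerequisites has finished."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    finished = []
    while sorter.is_active():
        # get_ready() yields all stages whose prerequisites are done;
        # a real executor could submit these in parallel.
        for stage in sorter.get_ready():
            finished.append(stage)  # stand-in for submitting and awaiting a job
            sorter.done(stage)
    return finished

order = run_stages(stages)
print(order)
```

The two scan stages have no dependencies on each other, so their relative order may vary; join always comes after both, and group_by_word always comes last.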
This results in the count column holding the number of occurrences of each word in the word column. ORDER BY word then sorts the words alphabetically.

The storage and querying operations of Hive closely resemble those of traditional databases. While Hive uses an SQL dialect, there are many differences in the structure and working of Hive compared with relational databases. The differences are mainly because Hive is built on top of the Hadoop ecosystem, and has to comply with the restrictions of Hadoop and MapReduce.

In traditional databases, a schema is applied to a table, and the table typically enforces the schema when data is loaded into it. This enables the database to make sure that the data entered follows the representation of the table as specified by the table definition. This design is called schema on write. In comparison, Hive does not verify the data against the table schema on write; instead, it performs run-time checks when the data is read. This model is called schema on read. The two approaches have their own advantages and drawbacks. Checking data against the table schema at load time adds extra overhead, which is why traditional databases take longer to load data. Quality checks are performed against the data at load time to ensure that it is not corrupt, and early detection of corrupt data enables early exception handling. Hive, on the other hand, can load data dynamically without any schema check, ensuring a fast initial load, but with the drawback of comparatively slower performance at query time. Hive does have an advantage when the schema is not available at load time but is instead generated later dynamically.

Transactions are key operations in traditional databases. As in any typical RDBMS, Hive supports all four properties of transactions (ACID): atomicity, consistency, isolation, and durability. Transactions in Hive were introduced in the Hive 0.x release line, and later releases extended this support.
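The trade-off between schema on write and schema on read can be sketched in a few lines of Python. This is a toy model under stated assumptions: the two-column SCHEMA and the comma-separated input are hypothetical, and turning unparseable or missing fields into None is only meant to resemble how Hive surfaces such values as NULL at read time.

```python
# Hypothetical table schema: (column name, Python type used to parse the field).
SCHEMA = [("word", str), ("count", int)]

def parse_row(line):
    """Split a comma-separated line into raw field strings."""
    return line.split(",")

def load_schema_on_write(raw_lines):
    """Schema on write: validate and convert every row at load time.
    Any malformed row makes the whole load fail."""
    table = []
    for line in raw_lines:
        fields = parse_row(line)
        if len(fields) != len(SCHEMA):
            raise ValueError(f"wrong number of fields: {line!r}")
        # int("oops") raises ValueError here, so bad values also fail the load.
        table.append(tuple(typ(value) for (_, typ), value in zip(SCHEMA, fields)))
    return table

def load_schema_on_read(raw_lines):
    """Schema on read: loading just copies the raw data, with no checks."""
    return list(raw_lines)

def read_with_schema(stored_lines):
    """Apply the schema at query time; missing or unconvertible fields
    come back as None, standing in for SQL NULL."""
    rows = []
    for line in stored_lines:
        fields = parse_row(line)
        row = []
        for i, (_, typ) in enumerate(SCHEMA):
            try:
                row.append(typ(fields[i]))
            except (IndexError, ValueError):
                row.append(None)
        rows.append(tuple(row))
    return rows

raw = ["hive,3", "pig,oops", "sql"]
stored = load_schema_on_read(raw)  # fast: nothing is checked
print(read_with_schema(stored))    # → [('hive', 3), ('pig', None), ('sql', None)]
try:
    load_schema_on_write(raw)
except ValueError as err:
    print("load rejected:", err)
```

The schema-on-write loader pays the checking cost up front and rejects the batch; the schema-on-read path loads instantly and defers both the cost and the bad-data handling to every query.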
Enabling INSERT, UPDATE and DELETE transactions requires setting appropriate values for the relevant hive.* configuration properties.

Hadoop began using Kerberos authentication support to provide security. Kerberos allows for mutual authentication between client and server. Previous versions of Hadoop had several issues, such as users being able to spoof their username by setting a hadoop.* configuration property. Now, TaskTracker jobs are run by the user who launched them, and the username can no longer be spoofed by setting that property.

Permissions for newly created files in Hive are dictated by HDFS. The HDFS authorization model uses three entities (user, group and others) with three permissions (read, write and execute). The default permissions for newly created files can be set by changing the umask value in the corresponding hive.* configuration variable.
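How a umask turns into default permissions can be worked through with a small Python helper. The sketch assumes the POSIX-style rule that HDFS also follows by default: a new file starts from mode 0666 and a new directory from 0777, and every bit set in the umask is cleared. The function names are illustrative only.

```python
def effective_mode(umask: int, is_dir: bool = False) -> int:
    """New files start from 0o666 and directories from 0o777;
    each bit set in the umask is removed from that base mode."""
    base = 0o777 if is_dir else 0o666
    return base & ~umask

def rwx(mode: int) -> str:
    """Render the user/group/others permission bits the way `ls -l` does."""
    out = []
    for shift in (6, 3, 0):  # user, group, others
        bits = (mode >> shift) & 0o7
        out.append(("r" if bits & 4 else "-")
                   + ("w" if bits & 2 else "-")
                   + ("x" if bits & 1 else "-"))
    return "".join(out)

print(oct(effective_mode(0o022)), rwx(effective_mode(0o022)))
# → 0o644 rw-r--r--
print(oct(effective_mode(0o002, is_dir=True)), rwx(effective_mode(0o002, is_dir=True)))
# → 0o775 rwxrwxr-x
```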

Hive training by Sreeram sir

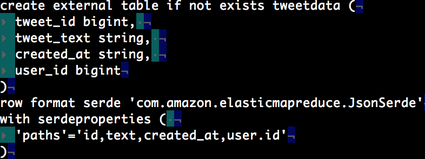

This is because with count(1) there is no referenced column, and therefore no decompression or deserialization takes place while performing the count. Also, without Hive, even a simple analysis can require writing a hundred lines of MapReduce code. Hive is targeted towards users who are comfortable with SQL. Dropping an external table removes only its metadata from Hive, not the underlying data files; therefore, you can still retrieve the data of that external table from its HDFS location using HDFS commands. Hive provides an SQL dialect, called Hive Query Language (abbreviated HiveQL or just HQL), for querying data stored in a Hadoop cluster. Client-side languages with embedded SQL can likewise be used to access databases such as DB2.
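The count(1) argument can be illustrated with a toy Python model of a column whose values are stored serialized and decoded only on demand. LazyColumn and the two count functions are hypothetical; JSON stands in for a real columnar encoding such as ORC, and the decode counter simply shows that counting rows needs no decoding while counting the non-null values of a column does.

```python
import json

class LazyColumn:
    """Hypothetical column chunk: values are kept serialized (JSON text here)
    and decoded only on demand, counting how often decoding happens."""

    def __init__(self, values):
        self._encoded = [json.dumps(v) for v in values]
        self.decode_calls = 0

    def __len__(self):
        return len(self._encoded)  # row count is known without decoding

    def decode(self, i):
        self.decode_calls += 1
        return json.loads(self._encoded[i])

def count_rows(col):
    """Like count(1): every row counts, so no value is decoded."""
    return len(col)

def count_non_null(col):
    """Like count(col): each value must be decoded to check it for NULL."""
    return sum(1 for i in range(len(col)) if col.decode(i) is not None)

col = LazyColumn(["a", None, "c"])
print(count_rows(col), col.decode_calls)      # → 3 0
print(count_non_null(col), col.decode_calls)  # → 2 3
```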